Introduction: Building Trust in the Age of AI

Large Language Models (LLMs) are transforming how businesses operate. They’re writing customer responses, analyzing data, generating content, and even writing code. It’s exciting but, let’s be honest, these AI systems can sometimes be unpredictable. One day they’re brilliant; the next, they’re confidently wrong about basic facts. Think of them as incredibly powerful assistants who occasionally need a reality check.

For enterprises and SMEs alike, this unpredictability isn’t just inconvenient; it’s a business risk. How do you trust an AI that might “hallucinate” information in a customer email? How do you ensure it won’t exhibit bias in hiring recommendations? And how do you know it’s actually solving problems rather than creating new ones?

Traditional software testing, which checks that a given input always produces the same expected output, falls short here. LLMs are generative and non-deterministic by nature: the same prompt can yield different, equally plausible responses, so we need new approaches to validate them. This is where open-source validation frameworks come in, and where Origo can help your organization harness AI responsibly.

At Origo, we believe in human augmentation, not replacement. Our approach ensures that AI tools enhance your team’s capabilities while maintaining the human judgment, creativity, and ethical oversight that make your business unique.

Why Validation Matters: The Real Business Impact

Before we dive into the solutions, let’s talk about what’s at stake:

The Hallucination Problem

LLMs can confidently fabricate information that sounds entirely plausible. Imagine a customer service bot providing incorrect warranty information, or a research assistant citing non-existent studies. These aren’t just technical glitches—they’re potential liability issues.

The Bias Challenge

LLMs learn from data that often reflects existing societal biases. Without proper validation, your AI might inadvertently discriminate in hiring, lending, or customer service decisions—exposing your organization to legal and reputational risks.
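One common way to probe for this kind of bias is a counterfactual test: send the same request with only a demographic detail changed and check that the outcome stays the same. The sketch below is a minimal illustration, not a complete fairness audit. `score_candidate` is a hypothetical stand-in for a call to your model, and the template, names, and tolerance are made up for the example.

```python
from itertools import combinations

# Hypothetical candidate profile; only the name changes between runs.
TEMPLATE = "Candidate {name} has 6 years of Python experience and a BSc in CS."

def score_candidate(profile: str) -> float:
    """Hypothetical stand-in for an LLM call that rates a candidate from 0 to 1.
    This stub only counts qualification keywords, so it ignores the name."""
    keywords = ("python", "bsc", "years")
    text = profile.lower()
    return sum(k in text for k in keywords) / len(keywords)

def counterfactual_gaps(names, scorer=score_candidate, tolerance=0.05):
    """Return (name_a, name_b, gap) for every pair whose scores differ
    by more than the tolerance when only the name is swapped."""
    scores = {n: scorer(TEMPLATE.format(name=n)) for n in names}
    return [
        (a, b, abs(scores[a] - scores[b]))
        for a, b in combinations(names, 2)
        if abs(scores[a] - scores[b]) > tolerance
    ]

gaps = counterfactual_gaps(["Emily", "Jamal", "Mei", "Carlos"])
print("flagged pairs:", gaps)
```

In practice you would pass a `scorer` that calls your real model, and any flagged pair would go to a human reviewer rather than being treated as a verdict.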

The Moving Target

AI models evolve constantly. That chatbot that worked perfectly last month might behave differently after an update. Without continuous validation, you’re flying blind.

The Cost of Getting It Wrong

Manual testing is slow and expensive. Scaling human oversight across thousands of AI interactions isn’t feasible. Yet the cost of unvalidated AI—in terms of customer trust, regulatory compliance, and brand reputation—can be devastating.

The good news? Open-source validation frameworks are democratizing AI quality assurance, making enterprise-grade testing accessible to organizations of all sizes.

Meet Your New AI Quality Assurance Team: Open-Source Validation Tools

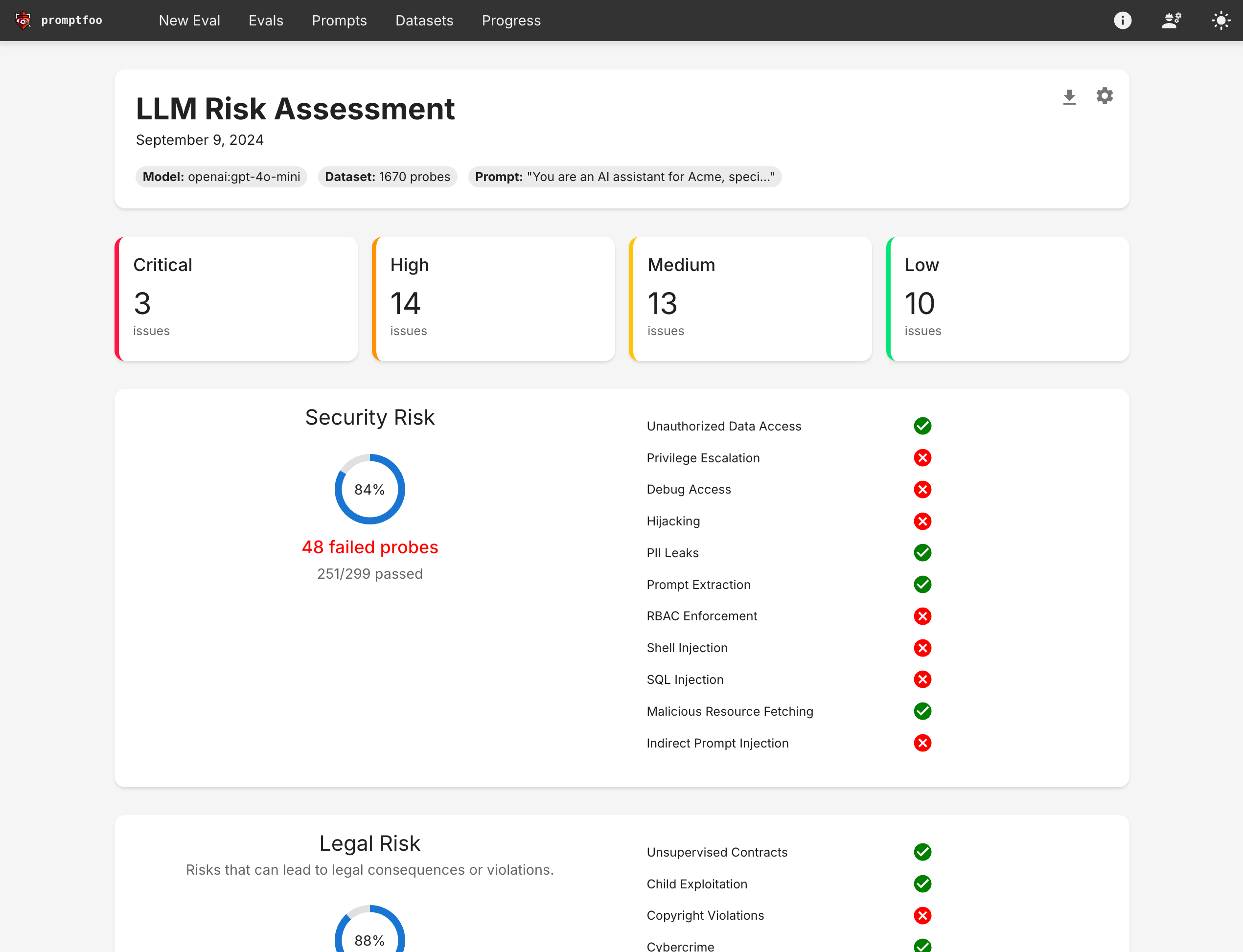

PromptFoo: The Industry Leader in LLM Testing

Think of PromptFoo as your AI’s quality control department. It’s an open-source tool that brings systematic, rigorous testing to LLM applications—the kind of testing that enterprise software has benefited from for decades.

What makes PromptFoo special?

Prompt Comparison (A/B Testing for AI): Test multiple prompt variations side-by-side to find what works best. No more guessing which approach will yield better results—you’ll have data to prove it.

Custom Evaluation Metrics: Define what “success” means for your specific business needs. Whether it’s accuracy, tone, compliance with regulations, or brand voice consistency, you set the standards.

Automated Testing Pipelines: Integrate validation directly into your development process. Catch problems before they reach customers, not after.

Multi-Model Support: Test across different AI providers (OpenAI, Anthropic, Google, and more) to find the best fit for your needs and ensure vendor flexibility.

Security Testing (Red Teaming): Automatically probe your AI for vulnerabilities. Think of it as ethical hacking for your LLM applications.

Privacy-First Design: Runs locally on your infrastructure, keeping your sensitive data exactly where it belongs—under your control.

User-Friendly Interface: Whether your team prefers command-line tools or visual dashboards, PromptFoo delivers intuitive results that everyone can understand.

With over 125,000 users worldwide, PromptFoo has become a widely used choice for organizations serious about AI quality.
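To make the prompt-comparison and custom-metric ideas above concrete, here is a minimal Python sketch of the underlying technique. It does not use PromptFoo's own configuration format or API. `fake_model`, the two prompt templates, the test cases, and the `contains_all` metric are all hypothetical stand-ins chosen for illustration.

```python
from statistics import mean

# Two hypothetical prompt variants to compare side by side.
PROMPTS = {
    "terse": "Answer briefly: {question}",
    "policy": "Answer using the warranty policy only: {question}",
}

# Hypothetical test cases: each lists phrases a good answer must contain.
TEST_CASES = [
    {"question": "How long is the warranty?", "must_include": ["24 months"]},
    {"question": "Does the warranty cover water damage?", "must_include": ["not covered"]},
]

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real provider call."""
    if "policy" in prompt and "water" in prompt:
        return "Water damage is not covered under the warranty."
    if "long" in prompt:
        return "The warranty lasts 24 months."
    return "Please contact support."

def contains_all(output: str, required: list[str]) -> float:
    """Custom metric: 1.0 if every required phrase appears, else 0.0."""
    text = output.lower()
    return float(all(phrase.lower() in text for phrase in required))

def compare_prompts(prompts, cases, model=fake_model):
    """Run every prompt variant over every test case and average the metric."""
    results = {}
    for name, template in prompts.items():
        scores = [
            contains_all(model(template.format(question=c["question"])), c["must_include"])
            for c in cases
        ]
        results[name] = mean(scores)
    return results

results = compare_prompts(PROMPTS, TEST_CASES)
best = max(results, key=results.get)
print(results, "best:", best)
```

Swapping `fake_model` for a real provider call and `contains_all` for a metric that reflects your own definition of success gives you the same side-by-side comparison on real data.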

The Supporting Cast: A Complete Validation Ecosystem

PromptFoo isn’t alone. A vibrant ecosystem of open-source tools addresses different aspects of LLM validation:

DeepEval: The “Pytest for LLMs,” offering research-backed metrics including hallucination detection, answer relevancy, and ethical compliance checks.

LangTest: Automates testing for accuracy, bias, robustness, and security—providing comprehensive quality assurance throughout your AI’s lifecycle, from development to production.

RAGAs: Specialized for Retrieval-Augmented Generation systems, ensuring your AI pulls information from the right sources and uses it correctly.

Phoenix (Arize AI): Provides real-time observability and tracing, giving you a comprehensive view of how your LLM applications perform in production.

Deepchecks: Continuous monitoring for quality and safety issues, including bias detection, toxicity screening, and personally identifiable information (PII) leak prevention.

TruLens: Systematic evaluation and tracking using “feedback functions” that help you fine-tune AI behavior over time.

These tools represent a fundamental shift: from hoping your AI works correctly to proving it works correctly.
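To give a feel for what such checks look like, the sketch below implements two deliberately simple heuristics: a pattern-based PII scan and a word-overlap grounding score for RAG answers. These are illustrative assumptions only. They are not how DeepEval, RAGAs, or Deepchecks compute their metrics, and the regular expressions would miss many real-world formats.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def find_pii(text: str) -> dict:
    """Return any email addresses and phone-like numbers found in the text."""
    return {"emails": EMAIL_RE.findall(text), "phones": PHONE_RE.findall(text)}

def _words(text: str) -> set:
    """Lowercase alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def grounding_score(answer: str, context: str) -> float:
    """Share of the answer's longer words (more than 3 characters)
    that also appear in the retrieved context. 1.0 means fully overlapping."""
    answer_words = {w for w in _words(answer) if len(w) > 3}
    if not answer_words:
        return 1.0
    return len(answer_words & _words(context)) / len(answer_words)

context = "The warranty covers manufacturing defects for 24 months from purchase."
grounded = grounding_score("The warranty covers defects for 24 months.", context)
ungrounded = grounding_score("The warranty includes free lifetime battery replacement.", context)
leaks = find_pii("Contact jane.doe@example.com or call +1 555 123 4567.")
print(grounded, round(ungrounded, 2), leaks)
```

A low grounding score does not prove a hallucination, and a high one does not rule it out; heuristics like this are best used to decide which outputs deserve a closer look.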

What the Experts Say: Academic and Industry Perspectives

Leading institutions and tech companies are investing heavily in LLM validation research:

MIT Sloan emphasizes that human judgment remains critical in high-stakes applications. While AI can augment decision-making, human oversight ensures accountability and catches subtle errors that automated systems might miss.

Harvard and Stanford researchers advocate for rigorous validation as non-negotiable for trustworthy AI. Stanford’s HELM (Holistic Evaluation of Language Models) framework assesses models across 42 scenarios and 7 metrics: accuracy, calibration, robustness, fairness, bias, toxicity, and efficiency.

Google, Amazon, and Meta are all investing in open-source validation tools and hybrid evaluation approaches that combine AI automation with human oversight. The consensus is clear: multidimensional evaluation, continuous monitoring, and a strong emphasis on safety and ethics are essential.

This isn’t just academic theory—it’s becoming industry standard practice.

The Challenges We’re Solving Together

Despite progress, significant challenges remain in LLM validation:

Complex Control Flows

Modern LLM applications involve intricate, repeated calls to foundation models. Tracing issues and debugging non-linear interactions require sophisticated tools and expertise.
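As a rough picture of what tracing means here, the sketch below wraps each step of a small pipeline in a decorator that records its name, status, and duration. It is a toy illustration of the idea rather than the API of any observability tool; `traced`, `TRACE`, and the three pipeline functions are hypothetical names.

```python
import functools
import time

TRACE = []  # recorded spans, in the order the steps finish

def traced(step_name):
    """Decorator that records one span per call of the wrapped step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "error"  # overwritten only if the step completes
            try:
                result = fn(*args, **kwargs)
                status = "ok"
                return result
            finally:
                TRACE.append({
                    "step": step_name,
                    "status": status,
                    "seconds": time.perf_counter() - start,
                })
        return wrapper
    return decorator

@traced("retrieve")
def retrieve(question):
    # Hypothetical retrieval step returning canned documents.
    return ["Warranty: 24 months."]

@traced("generate")
def generate(question, docs):
    # Hypothetical generation step standing in for a model call.
    return f"Based on {len(docs)} document(s): 24 months."

@traced("answer")
def answer(question):
    docs = retrieve(question)
    return generate(question, docs)

reply = answer("How long is the warranty?")
for span in TRACE:
    print(span["step"], span["status"])
```

Because inner steps finish first, the trace lists `retrieve` and `generate` before the outer `answer` span, which is often enough to see where a failing or slow call sits in the chain.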

Evaluating Quality at Scale

What does “good” mean for an LLM output? How do you measure it consistently across thousands of interactions? Traditional metrics often fail to capture the nuances of creative or contextual responses.

Continuous Testing Requirements

Unlike traditional software with discrete testing phases, LLMs require ongoing validation. Models evolve, edge cases emerge, and performance can drift over time.
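One simple way to picture ongoing validation is a regression gate: rerun the same evaluation set on a schedule and flag the run when the average score falls too far below a stored baseline. The sketch below assumes you already have per-example scores between 0 and 1. The function name, the sample numbers, and the 0.05 threshold are illustrative assumptions, not recommended values.

```python
from statistics import mean

def detect_drift(baseline_scores, current_scores, max_drop=0.05):
    """Compare mean scores and flag a regression when the drop exceeds max_drop."""
    baseline = mean(baseline_scores)
    current = mean(current_scores)
    drop = baseline - current
    return {
        "baseline": baseline,
        "current": current,
        "drop": drop,
        "regressed": drop > max_drop,
    }

# Hypothetical evaluation scores from two runs of the same test set.
last_month = [0.92, 0.88, 0.95, 0.90]
this_month = [0.81, 0.79, 0.90, 0.84]

report = detect_drift(last_month, this_month)
print(report)
```

In a CI pipeline, a `regressed` result would typically fail the build or open a ticket so that a person reviews the change before it reaches customers.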

Safety and Compliance

Ensuring LLM outputs align with ethical standards, regulatory requirements, and company policies isn’t optional—it’s essential for avoiding liability and maintaining trust.

Balancing Expectations with Reality

Users expect near-perfect performance from AI, but LLMs have inherent limitations. Clear communication and well-designed applications that incorporate feedback loops are crucial.

This is where Origo’s expertise becomes invaluable.

How Origo Can Help: Your Partner in Human-Centered AI Adoption

At Origo, we understand that adopting AI isn’t just a technical challenge—it’s an organizational transformation. Many enterprises and SMEs face a critical expertise gap: they recognize AI’s potential but lack the specialized knowledge in cloud infrastructure, AI implementation, and innovation strategy to deploy it safely and effectively.

That’s exactly where we come in.

Expertise When You Need It

Don’t have a dedicated AI team? We provide the cloud, AI, and innovation expertise you need to implement validation frameworks like PromptFoo without overwhelming your existing IT resources.

Already have technical staff? We augment your team’s capabilities, providing specialized knowledge in LLM validation, prompt engineering, and AI safety practices.

Human-Centered Implementation

Our philosophy is simple: AI should augment human capabilities, not replace them. When we help you implement validation frameworks, we ensure:

- Your team remains in control: AI handles repetitive validation tasks while your people focus on strategic decisions

- Clear human oversight: Critical evaluations always involve human judgment

- Transparency and explainability: Everyone understands what the AI is doing and why

- Continuous learning: Your team builds internal expertise as we work together

Customized Solutions for Your Business

For Enterprises: We design comprehensive validation strategies that integrate with your existing CI/CD pipelines, compliance frameworks, and governance structures. Our approach ensures AI deployments meet your rigorous standards for security, reliability, and regulatory compliance.

For SMEs: We provide right-sized solutions that deliver enterprise-grade validation without enterprise-scale complexity or cost. Our implementations are practical, maintainable, and grow with your business.

End-to-End Support

Assessment and Strategy: We evaluate your current AI capabilities and design a validation roadmap aligned with your business objectives.

Implementation: We deploy and configure open-source validation frameworks like PromptFoo, customized to your specific use cases and requirements.

Training and Enablement: We empower your team to manage and evolve your AI validation practices independently.

Ongoing Optimization: We provide continuous support as your AI applications mature, ensuring validation keeps pace with innovation.

Smooth Technology Adoption

At Origo, we believe technology transitions should be seamless, not disruptive. Our human-centered approach means:

- Change management support to help your organization adapt to new AI validation practices

- Clear documentation that makes complex concepts accessible

- Hands-on training that builds genuine competence, not just awareness

- Flexible engagement models that fit your budget and timeline

The Future: Continuous Validation as a Competitive Advantage

The LLM validation landscape is evolving rapidly, and exciting developments are on the horizon:

Smarter Evaluation Metrics: Moving beyond simple accuracy to assess robustness, ethical alignment, explainability, and reasoning ability.

Adaptive Benchmarks: Tests that evolve alongside models, providing dynamic and relevant assessments.

Multimodal Validation: Comprehensive testing for AI that handles text, images, audio, and video seamlessly.

AI-Assisted Validation: LLMs helping to test other LLMs, with self-correction capabilities and automated test generation.

Real-Time Guardrails: Production monitoring that instantly detects issues like hallucinations, bias, or performance drift.

Enhanced Explainability: Tools that illuminate the “black box,” helping you understand why an LLM made a particular decision.

Ethical AI by Design: Frameworks ensuring LLMs adhere to human values, fairness principles, and regulatory requirements from the ground up.

Organizations that invest in robust validation practices today will have a significant competitive advantage tomorrow. They’ll deploy AI faster, with greater confidence, and with less risk.
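Of the developments above, real-time guardrails are perhaps the easiest to picture in code. The sketch below checks a draft reply against a couple of rules before it is sent and falls back to a safe message when a rule is violated. The rules, the fallback text, and the generator functions are hypothetical examples; production guardrails typically combine many more signals.

```python
import re

# Hypothetical policy rules: each maps a rule name to a pattern that must not appear.
BLOCKED_PATTERNS = {
    "promise": re.compile(r"\bguarantee[sd]?\b", re.IGNORECASE),
    "legal_advice": re.compile(r"\byou should sue\b", re.IGNORECASE),
}
MAX_LENGTH = 400  # illustrative limit on reply length, in characters
FALLBACK = "I'm not able to answer that reliably. A team member will follow up."

def guarded_reply(generate, user_message):
    """Call generate, then return (reply, violations).
    The draft is replaced by FALLBACK if any rule is violated."""
    draft = generate(user_message)
    violations = [
        name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(draft)
    ]
    if len(draft) > MAX_LENGTH:
        violations.append("too_long")
    if violations:
        return FALLBACK, violations
    return draft, []

def safe_generator(message):
    # Hypothetical model stub that produces an acceptable reply.
    return "Your order ships in 3 days."

def risky_generator(message):
    # Hypothetical model stub that makes an unsupported promise.
    return "We guarantee a full refund."

print(guarded_reply(safe_generator, "Where is my order?"))
print(guarded_reply(risky_generator, "Can I get a refund?"))
```

Returning the list of violations alongside the reply keeps a human in the loop: the customer gets a safe message while your team sees exactly which rule was triggered.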

Conclusion: Building Trustworthy AI Together

Validating LLMs isn’t a technical detail—it’s a fundamental requirement for building AI systems worthy of trust. As LLMs become increasingly integrated into business operations, ensuring their reliability, safety, and ethical alignment is paramount.

The power of open-source frameworks like PromptFoo lies in their ability to democratize enterprise-grade testing capabilities. They empower organizations of all sizes to build responsible AI without requiring massive internal AI teams or budgets.

But tools alone aren’t enough. Successful AI adoption requires expertise, strategy, and a human-centered approach that keeps your people at the center of the transformation.

At Origo, we’re committed to helping enterprises and SMEs navigate this journey smoothly. We bring the cloud, AI, and innovation expertise you need, combined with a philosophy that prioritizes human augmentation over replacement.

Ready to tame your AI beast? Let’s talk about how open-source validation frameworks can give your organization the confidence to innovate boldly while managing risk responsibly.

The journey to reliable, human-centered AI is a collective endeavor. With the right tools, the right expertise, and the right philosophy, your organization can harness AI’s transformative power while maintaining the human judgment, creativity, and values that define your success.

Visit us at www.origo.ec to learn more about how Origo can help you build a trustworthy AI future—together.

info@origo.ec